Releases: IBMStreams/streamsx.messagehub

IBM Message Hub Toolkit v1.9.2

What's new in this toolkit release

This release corrects the following bugs in the underlying Kafka toolkit, which affect all MessageHub toolkit versions since 1.9.0:

- IBMStreams/streamsx.kafka#155 (CrKafkaConsumerGroupClient goes into wrong state and can lose tuples after reset of CR)

- IBMStreams/streamsx.kafka#153 (expand relative paths in Kafka properties). Note that this correction is most likely not relevant for the MessageHub toolkit.

You can find the SPL documentation online at https://ibmstreams.github.io/streamsx.messagehub/docs/user/SPLDoc/

IBM Message Hub Toolkit v1.9.1

What's new in this toolkit release

This release has the following changes and new features:

- This toolkit is based on the new Kafka toolkit v1.9.1

- The following improvements have been made in the underlying Kafka toolkit:

  - Improved low-memory detection for the consumer when records are queued in the consumer

  - The retries producer configuration is no longer adjusted to 10 as long as the consistent region policy is not Transactional. When retries is not specified, the default retries config of the version 2.1.1 producer (2,147,483,647) is used.

You can find the SPL documentation online at https://ibmstreams.github.io/streamsx.messagehub/docs/user/SPLDoc/

IBM Message Hub Toolkit v1.9.0

What's new in this toolkit release

This release has the following changes and new features:

- This toolkit is based on the new Kafka toolkit v1.9.0.

- The Kafka client has been upgraded to version 2.1.1. For the consumer and producer configuration, please consult the Consumer Configs or Producer Configs section of the Apache Kafka 2.1 documentation.

- The MessageHubProducer can now optionally be flushed after a fixed tuple count. Please review the new optional flush parameter in the SPL documentation.
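To illustrate what flushing after a fixed tuple count means, here is a minimal Python sketch. It is not the toolkit's implementation: `CountingFlusher` and `FakeProducer` are hypothetical names, and the fake producer only counts calls instead of talking to a broker.

```python
class FakeProducer:
    """Hypothetical stand-in for a Kafka producer; records calls, does no I/O."""

    def __init__(self):
        self.sent = []
        self.flushes = 0

    def send(self, record):
        self.sent.append(record)

    def flush(self):
        self.flushes += 1


class CountingFlusher:
    """Calls flush() on the wrapped producer after every `flush_every` records."""

    def __init__(self, producer, flush_every):
        if flush_every <= 0:
            raise ValueError("flush_every must be positive")
        self.producer = producer
        self.flush_every = flush_every
        self.pending = 0  # records sent since the last flush

    def send(self, record):
        self.producer.send(record)
        self.pending += 1
        if self.pending >= self.flush_every:
            self.producer.flush()
            self.pending = 0


producer = FakeProducer()
flusher = CountingFlusher(producer, flush_every=3)
for i in range(7):
    flusher.send(i)
print(producer.flushes, flusher.pending)
```

With seven records and a flush count of three, the sketch flushes after the third and sixth record and leaves one record pending.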

Bug fixes:

- Fixed a bug in the underlying Kafka toolkit: IBMStreams/streamsx.kafka#148

You can find the SPL documentation online at https://ibmstreams.github.io/streamsx.messagehub/docs/user/SPLDoc/

IBM Message Hub Toolkit v1.8.0

What's new in this toolkit release

This release is based on the Kafka toolkit version 1.8.0 and inherits the following changes and new features from the Kafka toolkit:

- The MessageHubConsumer can subscribe dynamically to multiple topics by specifying a regular expression using the new pattern parameter. Partitions of matching topics are assigned at the time of the periodic check. When someone creates a new topic with a name that matches, a rebalance will happen almost immediately and the consumers will start consuming from the new topic.

- The control port of the MessageHubConsumer allows assignment with the default fetch position. New SPL functions and SPL types to generate the JSON string for the control port have been added.

- For the MessageHubConsumer, you can now specify a time period for committing offsets when the operator is used in an autonomous region. Use the new commitPeriod parameter. In previous versions, you could specify only a tuple count, after which offsets are committed.

- The time policy for offset commit is now the default policy of the KafkaConsumer when not in a consistent region. The time policy avoids excessively high commit request rates, which can occur with the count-based policy and high tuple rates. The default commit interval is 5 seconds.

- The operators can now be configured with a config checkpoint: clause when used in an autonomous region. The MessageHubProducer operator simply ignores the config instead of throwing an error at compile time. The MessageHubConsumer operator can be configured with operator-driven and periodic checkpointing. Checkpointing is in effect when the operator is configured with the optional input port. Then, the operator checkpoints the assigned partitions. On reset, the assigned partitions are restored, and the consumer resumes fetching at the last committed offset.
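The difference between the count-based and the time-based commit policy can be made concrete with a little arithmetic. The following Python sketch is only an illustration: the tuple rate of 100,000 tuples per second is an assumed figure, the commit count of 2000 is borrowed from the commitCount default mentioned in the v1.7.0 notes, and the function names are hypothetical.

```python
def commit_rate_count_policy(tuples_per_second, commit_count):
    """Commit requests per second when committing every `commit_count` tuples."""
    return tuples_per_second / commit_count


def commit_rate_time_policy(commit_period_seconds):
    """Commit requests per second when committing every `commit_period_seconds`."""
    return 1.0 / commit_period_seconds


# Assumed workload: 100,000 tuples per second (made up for illustration).
by_count = commit_rate_count_policy(100_000, 2000)
by_time = commit_rate_time_policy(5.0)

print(by_count)  # 50.0 commits per second, grows with the tuple rate
print(by_time)   # 0.2 commits per second, independent of the tuple rate
```

The count-based rate scales with the tuple rate, while the time-based rate stays constant, which is why the time policy keeps the commit request rate bounded.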

Bug fixes:

The following fixes affect the SPL documentation:

- #79 Final punctuation at input port of MessageHubConsumer makes the Consumer stop submitting tuples.

- #80 Wrong SPLDoc for control port of MessageHubConsumer

You can find the SPL documentation online at https://ibmstreams.github.io/streamsx.messagehub/docs/user/SPLDoc/

IBM Message Hub Toolkit v1.7.4

This bugfix release contains the following fixes:

- #77 trace of cloud service credentials at INFO level

This release is based on the Kafka toolkit v1.7.3.

The downloadable builds are built with IBM Streams 4.3.0.0 on RHEL 7.

IBM Message Hub Toolkit v1.7.3

This bugfix release contains the following fixes:

- #75 New compression.type setting potentially breaks existing non-Streams consumers

The producer property compression.type is no longer set by default. Releases 1.6.0 through 1.7.2 used lz4 as the default value.

IBM Message Hub Toolkit v1.7.2

This bugfix release contains the following fixes:

- #73 Change trace level for metrics dump

- Improved exception handling for the consumer

IBM Message Hub Toolkit v1.7.1

This bugfix release is based on the Kafka bugfix release v1.7.1, which fixes the following issues:

- IBMStreams/streamsx.kafka#110 - Offsets are committed during partition rebalance within a consumer group, more precisely before partition revocation

- IBMStreams/streamsx.kafka#136 - KafkaConsumer shows old metric value for "<topic-partition>:records-lag". Partition-related custom metrics that are no longer valid for an operator now show -1 instead of keeping their last value.

IBM Message Hub Toolkit v1.7.0

What's new in this release

This release has the following changes and new features:

- The default value of the commitCount parameter of the MessageHubConsumer has changed from 500 to 2000.

- Both operators of the MessageHub toolkit have a new optional parameter credentials, which can be used to specify the service credentials in JSON as an SPL expression (#68).

- The toolkit contains SPL types for standard messages (#15).

- MessageType.StringMessage

- MessageType.BlobMessage

- MessageType.ConsumerMessageMetadata

- MessageType.TopicPartition

IBM Message Hub Toolkit v1.6.0

What's new

This release of the MessageHub toolkit is based on the Kafka toolkit version 1.6.0 and inherits the following new features from this release:

MessageHubProducer operator

1. Metric reporting

The Kafka producer in the client collects various performance metrics. A subset has been exposed as custom metrics to the operator - issue #112 in the Kafka toolkit.

| Custom Metric name | Description |
|---|---|
| connection-count | The current number of active connections. |
| compression-rate-avg | The average compression rate of record batches (as percentage, 100 means no compression). |
| topic:compression-rate | The average compression rate of record batches for a topic (as percentage, 100 means no compression). |
| record-queue-time-avg | The average time in ms record batches spent in the send buffer. |
| record-queue-time-max | The maximum time in ms record batches spent in the send buffer. |
| record-send-rate | The average number of records sent per second. |
| record-retry-total | The total number of retried record sends. |
| topic:record-send-total | The total number of records sent for a topic. |
| topic:record-retry-total | The total number of retried record sends for a topic. |
| topic:record-error-total | The total number of record sends that resulted in errors for a topic. |
| records-per-request-avg | The average number of records per request. |
| requests-in-flight | The current number of in-flight requests awaiting a response. |
| request-rate | The number of requests sent per second. |
| request-size-avg | The average size of requests sent. |
| request-latency-avg | The average request latency in ms. |
| request-latency-max | The maximum request latency in ms. |
| batch-size-avg | The average number of bytes sent per partition per request. |
| outgoing-byte-rate | The number of outgoing bytes sent to all servers per second. |
| bufferpool-wait-time-total | The total time an appender waits for space allocation. |
| buffer-available-bytes | The total amount of buffer memory that is not being used (either unallocated or in the free list). |

2. Default producer configs

Previous releases of the toolkit used the Kafka default producer configs unless otherwise configured by the user. For optimum throughput, these settings had to be tuned. Now, the important producer configs have default values that result in higher throughput to the broker and better reliability - issue #113 in the Kafka toolkit:

| Property name | Kafka default | New operator default |
|---|---|---|
| retries | 0 | 10. When 0 is provided as retries and the consistentRegionPolicy parameter is Transactional, retries is adjusted to 1. |
| compression.type | none | lz4 |
| linger.ms | 0 | 100 |
| batch.size | 16384 | 32768 |
| max.in.flight.requests.per.connection | 5 | 1 when the guaranteeOrdering parameter is true, limited to 5 when provided and the consistentRegionPolicy parameter is Transactional, or 10 in all other cases. |

3. New optional operator parameter guaranteeOrdering

If set to true, the operator guarantees that the order of records within a topic partition is the same as the order of processed tuples, even when records are retried. This implies that the operator automatically sets the max.in.flight.requests.per.connection producer config to 1 if retries are enabled, i.e. when the retries config is not 0, which is the case with the operator default value.

The default value of this parameter is false, which means that the order can change due to retries.
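The interplay between the new operator defaults, the guaranteeOrdering parameter, and the consistentRegionPolicy parameter can be sketched as a small Python function. This is one reading of the rules stated in this release note, not the operator's actual code. The function name and argument names are hypothetical, and two points are assumptions: a user-provided max.in.flight.requests.per.connection is kept unchanged outside the Transactional policy, and guaranteeOrdering forces the value to 1 only while retries is not 0.

```python
MAX_IN_FLIGHT = "max.in.flight.requests.per.connection"


def effective_producer_config(user_config=None, guarantee_ordering=False,
                              consistent_region_policy=None):
    """Derive producer configs from the defaults described for release 1.6.0."""
    user = dict(user_config or {})
    # New operator defaults from the table; user-provided values win.
    cfg = {"retries": 10, "compression.type": "lz4",
           "linger.ms": 100, "batch.size": 32768}
    cfg.update(user)

    transactional = consistent_region_policy == "Transactional"
    if transactional and cfg["retries"] == 0:
        cfg["retries"] = 1  # retries 0 is adjusted to 1 for Transactional

    if guarantee_ordering and cfg["retries"] != 0:
        cfg[MAX_IN_FLIGHT] = 1  # preserve record order across retries
    elif MAX_IN_FLIGHT in user:
        # Assumption: only the Transactional policy caps a user value (at 5).
        cfg[MAX_IN_FLIGHT] = min(user[MAX_IN_FLIGHT], 5) if transactional \
            else user[MAX_IN_FLIGHT]
    else:
        cfg[MAX_IN_FLIGHT] = 10  # "10 in all other cases", read literally
    return cfg


print(effective_producer_config())
print(effective_producer_config(guarantee_ordering=True))
print(effective_producer_config({"retries": 0, MAX_IN_FLIGHT: 8},
                                consistent_region_policy="Transactional"))
```

Note that release 1.7.3 later removed the lz4 default for compression.type, and release 1.9.1 changed the handling of retries, so the sketch reflects the 1.6.0 description only.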

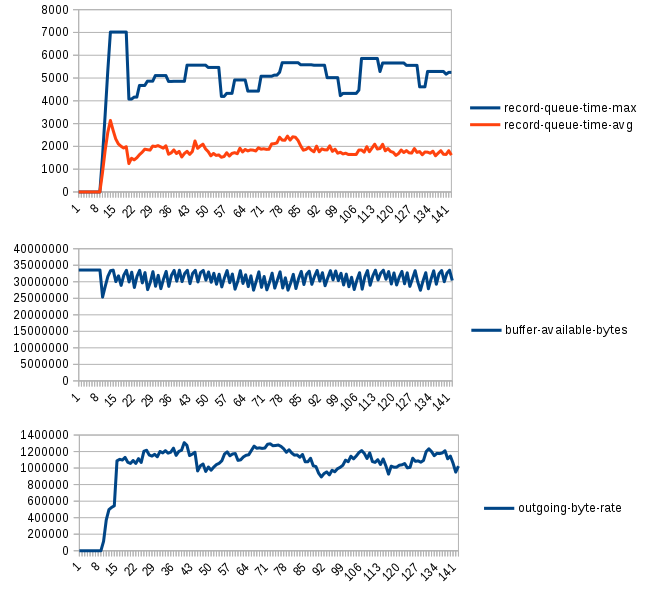

4. Queue time control

In previous releases including 1.5.1, the producer operator was easily damageable when the producer did not come up transferring the data to the broker nodes. Then records stayed too long in the accumulator (basically a buffer), what caused timeouts with subsequent operator restarts - issue #128 in the Kafka toolkit.

The producer now has an adaptive control that monitors several producer metrics and flushes the producer on occasion to stabilize the maximum queue time of records around 5 seconds, see figure below. This enhancement addresses the robustness of the producer operator.
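The release note does not describe the control algorithm itself. Purely as an illustration of the idea of an adaptive flush control, the following Python sketch adjusts a hypothetical flush interval (the number of records sent between two flushes) based on an observed maximum queue time such as record-queue-time-max. The function name, the halving and doubling steps, and the bounds are all assumptions and are not taken from the toolkit.

```python
def next_flush_interval(current_interval, observed_max_queue_ms,
                        target_ms=5000, min_interval=100,
                        max_interval=1_000_000):
    """Return the number of records to send before the next flush.

    Hypothetical rule: flush more often when records queue longer than the
    target, flush less often when the queue time is well below the target.
    """
    if observed_max_queue_ms > target_ms:
        proposed = current_interval // 2   # queue time too high: flush sooner
    elif observed_max_queue_ms < target_ms // 2:
        proposed = current_interval * 2    # plenty of headroom: flush later
    else:
        proposed = current_interval        # close enough to the target
    return max(min_interval, min(max_interval, proposed))


interval = 1000
for observed in (8000, 7000, 4000, 1000):
    interval = next_flush_interval(interval, observed)
    print(observed, interval)
```

A simple step rule like this tends to oscillate around the target; a real controller would likely smooth the metric and combine several inputs, as the note indicates that several producer metrics are monitored.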

MessageHubConsumer operator

1. Metric reporting

The Kafka consumer in the client collects various performance metrics. A subset has been exposed as custom metrics to the operator:

| Custom Metric name | Description |
|---|---|
| connection-count | The current number of active connections. |
| incoming-byte-rate | The number of bytes read off all sockets per second. |
| topic-partition:records-lag | The latest lag of the partition. |
| records-lag-max | The maximum lag in terms of number of records for any partition in this window. |
| fetch-size-avg | The average number of bytes fetched per request. |
| topic:fetch-size-avg | The average number of bytes fetched per request for a topic. |
| commit-rate | The number of commit calls per second. |
| commit-latency-avg | The average time taken for a commit request. |

One of the most interesting metrics is the record lag for every consumed topic partition. The lag is the difference between the offset of the last inserted record and the current reading position.
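As a small worked example of the lag definition, the following Python sketch computes the lag per topic partition from two offset maps. The function names and the sample offsets are made up for illustration. The sketch assumes that the end offset is the offset the next written record would receive, so that a consumer that has read everything has a lag of 0.

```python
def records_lag(end_offset, position):
    """Number of records written to a partition but not yet read."""
    return max(0, end_offset - position)


def lag_by_partition(end_offsets, positions):
    """Lag for every partition the consumer currently has a position for."""
    return {tp: records_lag(end_offsets[tp], positions[tp]) for tp in positions}


# Made-up offsets for two partitions of a hypothetical topic "events".
end_offsets = {("events", 0): 120, ("events", 1): 40}
positions = {("events", 0): 100, ("events", 1): 40}

print(lag_by_partition(end_offsets, positions))
```

In this example, partition 0 is 20 records behind and partition 1 is fully caught up.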