|

532 | 532 | "\n", |

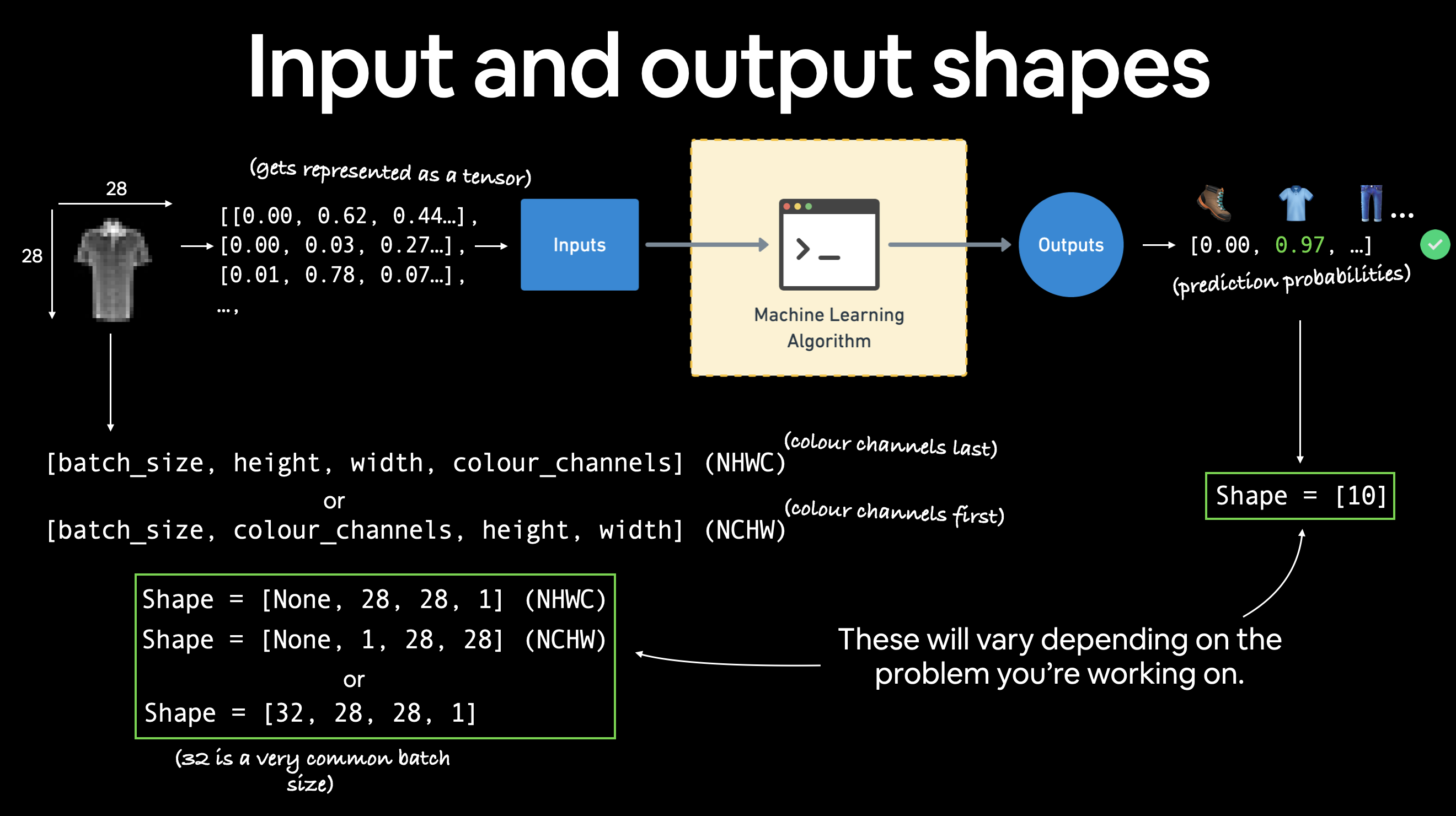

533 | 533 | "*Various problems will have various input and output shapes. But the premise remains: encode data into numbers, build a model to find patterns in those numbers, convert those patterns into something meaningful.*\n", |

534 | 534 | "\n", |

535 | | - "If `color_channels=3`, the image comes in pixel values for red, green and blue (this is also known a the [RGB color model](https://en.wikipedia.org/wiki/RGB_color_model)).\n", |

| 535 | + "If `color_channels=3`, the image comes in pixel values for red, green and blue (this is also known as the [RGB color model](https://en.wikipedia.org/wiki/RGB_color_model)).\n", |

536 | 536 | "\n", |

537 | 537 | "The order of our current tensor is often referred to as `CHW` (Color Channels, Height, Width).\n", |

538 | 538 | "\n", |
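
To illustrate the `CHW` ordering this hunk mentions, here is a minimal sketch of what reordering an image from `CHW` to `HWC` means. It uses plain Python nested lists rather than PyTorch tensors, and the helper name `chw_to_hwc` and the tiny 2x2 image are hypothetical, chosen only for illustration.

```python
def chw_to_hwc(image):
    """Reorder a nested-list image from [channel][height][width] to [height][width][channel]."""
    channels = len(image)
    height = len(image[0])
    width = len(image[0][0])
    return [
        [[image[c][h][w] for c in range(channels)] for w in range(width)]
        for h in range(height)
    ]


# Hypothetical 2x2 RGB image stored as CHW: one 2x2 grid per color channel.
chw_image = [
    [[255, 0], [0, 0]],  # red channel
    [[0, 255], [0, 0]],  # green channel
    [[0, 0], [255, 0]],  # blue channel
]

hwc_image = chw_to_hwc(chw_image)

# Each pixel is now a single [R, G, B] triple.
print(hwc_image[0][0])
```

With PyTorch tensors, the same reordering is usually done with `tensor.permute(1, 2, 0)`.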

|

802 | 802 | "\n", |

803 | 803 | "But I think coding a model in PyTorch would be faster.\n", |

804 | 804 | "\n", |

805 | | - "> **Question:** Do you think the above data can be model with only straight (linear) lines? Or do you think you'd also need non-straight (non-linear) lines?" |

| 805 | + "> **Question:** Do you think the above data can be modeled with only straight (linear) lines? Or do you think you'd also need non-straight (non-linear) lines?" |

806 | 806 | ] |

807 | 807 | }, |

808 | 808 | { |

|

999 | 999 | "\n", |

1000 | 1000 | "Our baseline will consist of two [`nn.Linear()`](https://pytorch.org/docs/stable/generated/torch.nn.Linear.html) layers.\n", |

1001 | 1001 | "\n", |

1002 | | - "We've done this in a previous section but there's going to one slight difference.\n", |

| 1002 | + "We've done this in a previous section but there's going to be one slight difference.\n", |

1003 | 1003 | "\n", |

1004 | 1004 | "Because we're working with image data, we're going to use a different layer to start things off.\n", |

1005 | 1005 | "\n", |

|

1430 | 1430 | " # 1. Forward pass\n", |

1431 | 1431 | " test_pred = model_0(X)\n", |

1432 | 1432 | " \n", |

1433 | | - " # 2. Calculate loss (accumatively)\n", |

| 1433 | + " # 2. Calculate loss (accumulatively)\n", |

1434 | 1434 | " test_loss += loss_fn(test_pred, y) # accumulatively add up the loss per epoch\n", |

1435 | 1435 | "\n", |

1436 | 1436 | " # 3. Calculate accuracy (preds need to be same as y_true)\n", |
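
The accumulation pattern in the hunk above can be sketched without a model. The per-batch loss values below are hypothetical stand-ins for `loss_fn(test_pred, y)`, and dividing by the number of batches afterwards is an assumption about the surrounding code, which this diff does not show.

```python
# Hypothetical per-batch loss values standing in for loss_fn(test_pred, y).
batch_losses = [0.9, 0.7, 0.5, 0.3]

test_loss = 0.0
for batch_loss in batch_losses:
    test_loss += batch_loss  # accumulate the loss across batches

# Assumption: the accumulated total is averaged over the number of batches.
average_test_loss = test_loss / len(batch_losses)
print(f"Average test loss: {average_test_loss:.2f}")
```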

|

1578 | 1578 | "\n", |

1579 | 1579 | "Now let's setup some [device-agnostic code](https://pytorch.org/docs/stable/notes/cuda.html#best-practices) for our models and data to run on GPU if it's available.\n", |

1580 | 1580 | "\n", |

1581 | | - "If you're running this notebook on Google Colab, and you don't a GPU turned on yet, it's now time to turn one on via `Runtime -> Change runtime type -> Hardware accelerator -> GPU`. If you do this, your runtime will likely reset and you'll have to run all of the cells above by going `Runtime -> Run before`." |

| 1581 | + "If you're running this notebook on Google Colab, and you don't have a GPU turned on yet, it's now time to turn one on via `Runtime -> Change runtime type -> Hardware accelerator -> GPU`. If you do this, your runtime will likely reset and you'll have to run all of the cells above by going `Runtime -> Run before`." |

1582 | 1582 | ] |

1583 | 1583 | }, |

1584 | 1584 | { |
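
For reference, device-agnostic setup is commonly written along these lines. This is a minimal sketch rather than the notebook's exact cell; the `try`/`except` fallback is an addition so the snippet still runs where PyTorch is not installed.

```python
try:
    import torch  # optional dependency for this sketch

    # Use the GPU if one is available, otherwise fall back to the CPU.
    device = "cuda" if torch.cuda.is_available() else "cpu"
except ImportError:
    # Assumption: without PyTorch installed, only the CPU path makes sense.
    device = "cpu"

print(f"Using device: {device}")
```

Models and tensors can then be moved with `.to(device)`, so the same code runs on either kind of hardware.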

|

1855 | 1855 | "\n", |

1856 | 1856 | "We'll do so inside another loop for each epoch.\n", |

1857 | 1857 | "\n", |

1858 | | - "That way for each epoch we're going a training and a testing step.\n", |

| 1858 | + "That way, for each epoch, we're going through a training step and a testing step.\n", |

1859 | 1859 | "\n", |

1860 | 1860 | "> **Note:** You can customize how often you do a testing step. Sometimes people do them every five epochs or 10 epochs or in our case, every epoch.\n", |

1861 | 1861 | "\n", |
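
The note above about testing every few epochs can be sketched as follows. The names `test_every` and `tested_epochs` are hypothetical, and the training and testing steps are left as comments.

```python
epochs = 10
test_every = 5  # hypothetical setting: run a testing step every 5 epochs

tested_epochs = []
for epoch in range(epochs):
    # A training step would run here on every epoch.
    if epoch % test_every == 0:
        # A testing step would run here, only on selected epochs.
        tested_epochs.append(epoch)

print(tested_epochs)
```

Setting `test_every = 1` gives the behavior described in the note, where a testing step runs on every epoch.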

|

1966 | 1966 | "\n", |

1967 | 1967 | "> **Note:** The training time on CUDA vs CPU will depend largely on the quality of the CPU/GPU you're using. Read on for a more explained answer.\n", |

1968 | 1968 | "\n", |

1969 | | - "> **Question:** \"I used a a GPU but my model didn't train faster, why might that be?\"\n", |

| 1969 | + "> **Question:** \"I used a GPU but my model didn't train faster, why might that be?\"\n", |

1970 | 1970 | ">\n", |

1971 | 1971 | "> **Answer:** Well, one reason could be because your dataset and model are both so small (like the dataset and model we're working with) the benefits of using a GPU are outweighed by the time it actually takes to transfer the data there.\n", |

1972 | 1972 | "> \n", |

|
