Hanjung Kim*, Jaehyun Kang*, Hyolim Kang, Meedeum Cho, Seon Joo Kim, Youngwoon Lee

[arXiv][Project][Dataset][BibTeX]

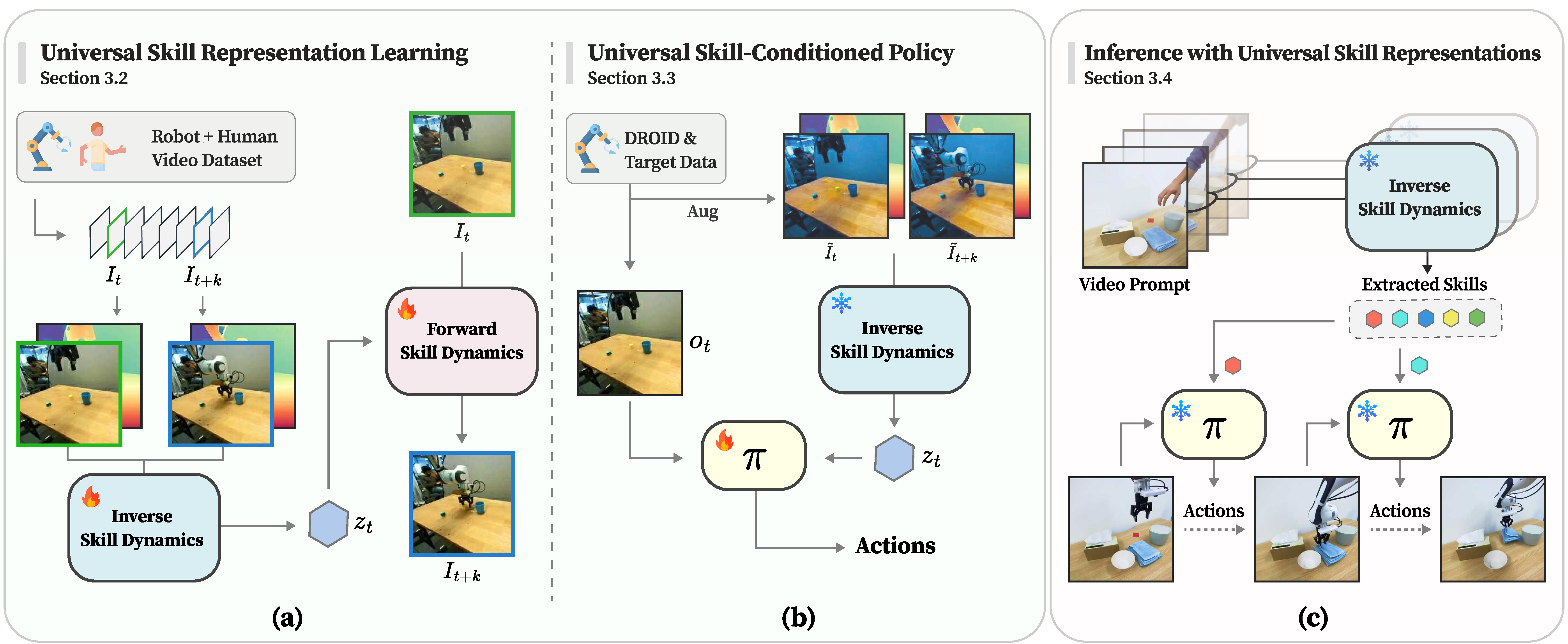

- A universal skill representation (UniSkill) that enables cross-embodiment imitation by learning from large-scale video datasets, without requiring scene-aligned data between embodiments.

- UniSkill supports skill transfer across agents with different morphologies, including human-to-robot and robot-to-robot adaptation.

- UniSkill leverages large-scale video pretraining to capture shared interaction dynamics, enhancing adaptability to novel settings.

We will release the following:

- FSD & ISD training code

- FSD & ISD checkpoint

- Real-world Dataset

- Skill-Conditioned Policy training & inference code

- Skill-Conditioned Policy checkpoint

- Skill-extraction code

- Linux or macOS with Python ≥ 3.10

- PyTorch ≥ 2.3 and a torchvision version that matches the PyTorch installation. Install them together by following the instructions at pytorch.org to ensure they match.

```bash
pip install -r requirements.txt
```
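After installing, a quick check that the environment meets the requirements listed above (Python ≥ 3.10, PyTorch ≥ 2.3, torchvision present) can save a failed training run. This is a minimal sketch, not part of the repository: `parse_version`, `meets_minimum`, and `check_environment` are hypothetical helpers, the parser only handles plain release strings such as `2.3.1` or `2.3.1+cu121` (not all of PEP 440), and it does not verify that the torchvision build actually matches the installed PyTorch.

```python
from __future__ import annotations

import importlib.metadata
import re
import sys


def parse_version(version: str) -> tuple[int, ...]:
    """Return the leading numeric release, e.g. '2.3.1+cu121' -> (2, 3, 1).

    Hypothetical helper: handles plain release strings only, not all of PEP 440.
    """
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(version: str, minimum: tuple[int, ...]) -> bool:
    """True if `version` is at least `minimum`, compared component by component."""
    return parse_version(version) >= minimum


def check_environment() -> list[str]:
    """Collect problems with the environment; an empty list means all checks passed."""
    problems: list[str] = []
    if sys.version_info < (3, 10):
        problems.append(f"Python {sys.version.split()[0]} found; need >= 3.10")

    # torch needs a minimum version; torchvision is only checked for presence.
    for package, minimum in (("torch", (2, 3)), ("torchvision", None)):
        try:
            installed = importlib.metadata.version(package)
        except importlib.metadata.PackageNotFoundError:
            problems.append(f"{package} is not installed")
            continue
        if minimum is not None and not meets_minimum(installed, minimum):
            problems.append(f"{package} {installed} found; need >= {'.'.join(map(str, minimum))}")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("Version checks passed.")
```

Reading versions through `importlib.metadata` avoids importing PyTorch itself, so the check stays fast and still works when a broken CUDA setup would make `import torch` fail.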

For dataset preparation instructions, refer to Preparing Datasets for UniSkill.

We provide the script train_uniskill.py for training UniSkill.

To train the Forward Skill Dynamics (FSD) and Inverse Skill Dynamics (ISD) models of UniSkill, first set up your custom datasets. Once your dataset is ready, run the following command:

```bash
cd diffusion

python train_uniskill.py \
  --do_classifier_free_guidance \
  --pretrained_model_name_or_path timbrooks/instruct-pix2pix \
  --allow_tf32 \
  --train_batch_size 32 \
  --dataset_name {Your Dataset} \
  --output_dir {output_dir} \
  --num_train_epochs 50 \
  --report_name {report_name} \
  --learning_rate 1e-4 \
  --validation_steps 50
```

For multi-GPU training, first modify the configuration file hf.yaml as needed. Then run the following command:

```bash
accelerate launch --config_file hf.yaml diffusion/train_uniskill.py \
  --do_classifier_free_guidance \
  --pretrained_model_name_or_path timbrooks/instruct-pix2pix \
  --allow_tf32 \
  --train_batch_size 32 \
  --dataset_name {Your Dataset} \
  --output_dir {output_dir} \
  --num_train_epochs 50 \
  --report_name {report_name} \
  --learning_rate 1e-4 \
  --validation_steps 50
```

Make sure to replace {Your Dataset}, {output_dir}, and {report_name} with the appropriate values.
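Because a forgotten placeholder such as `{output_dir}` would otherwise be passed to the script literally, it can help to assemble the training command in Python and reject unreplaced placeholders before launching. The sketch below is illustrative and not part of the repository: `build_train_command` and `effective_batch_size` are hypothetical helpers that reuse only the flags and values from the commands above. The batch-size arithmetic is an assumption: it presumes, as in the diffusers InstructPix2Pix training example whose flags these resemble, that `--train_batch_size` is a per-GPU value.

```python
from __future__ import annotations

import re
import shlex

# Matches an unreplaced placeholder such as "{Your Dataset}" or "{output_dir}".
PLACEHOLDER = re.compile(r"\{[^{}]*\}")


def build_train_command(
    dataset_name: str,
    output_dir: str,
    report_name: str,
    *,
    multi_gpu: bool = False,
    config_file: str = "hf.yaml",
    train_batch_size: int = 32,
) -> list[str]:
    """Return the argument list for one of the two training commands.

    Hypothetical helper: the flags and values mirror the README commands.
    """
    required = {"dataset_name": dataset_name, "output_dir": output_dir, "report_name": report_name}
    for flag, value in required.items():
        if not value or PLACEHOLDER.search(value):
            raise ValueError(f"--{flag} still needs a real value, got {value!r}")

    if multi_gpu:
        # Launch through Hugging Face Accelerate, with the script path used in the README.
        launcher = ["accelerate", "launch", "--config_file", config_file, "diffusion/train_uniskill.py"]
    else:
        # Single-process run, expected to be started from inside diffusion/.
        launcher = ["python", "train_uniskill.py"]

    return launcher + [
        "--do_classifier_free_guidance",
        "--pretrained_model_name_or_path", "timbrooks/instruct-pix2pix",
        "--allow_tf32",
        "--train_batch_size", str(train_batch_size),
        "--dataset_name", dataset_name,
        "--output_dir", output_dir,
        "--num_train_epochs", "50",
        "--report_name", report_name,
        "--learning_rate", "1e-4",
        "--validation_steps", "50",
    ]


def effective_batch_size(per_gpu_batch: int, num_gpus: int, grad_accum_steps: int = 1) -> int:
    """Samples per optimizer step, ASSUMING --train_batch_size is a per-GPU value."""
    return per_gpu_batch * num_gpus * grad_accum_steps


if __name__ == "__main__":
    # "my_dataset", "outputs/uniskill", and "uniskill_run" are made-up example values.
    cmd = build_train_command("my_dataset", "outputs/uniskill", "uniskill_run", multi_gpu=True)
    print(shlex.join(cmd))
    # num_gpus should match num_processes in your hf.yaml.
    print("effective batch size:", effective_batch_size(32, num_gpus=4))
```

The returned list can be passed directly to `subprocess.run(cmd, check=True)`, which sidesteps shell quoting for dataset names that contain spaces. Under the per-GPU assumption, four processes with `--train_batch_size 32` would give 128 samples per optimizer step.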

```bibtex
@inproceedings{kim2025uniskill,
  title={UniSkill: Imitating Human Videos via Cross-Embodiment Skill Representations},
  author={Hanjung Kim and Jaehyun Kang and Hyolim Kang and Meedeum Cho and Seon Joo Kim and Youngwoon Lee},
  booktitle={9th Annual Conference on Robot Learning},
  year={2025}
}
```